Welcome to Vents Blog! Today, we’re diving into the nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile and how it works. If you’re new to Docker and CUDA, this guide will show you an easy way to get started with CUDA on an Ubuntu 24.04 base. With this Dockerfile, you can set up CUDA quickly, so you can run applications that need GPU acceleration.

The nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile is great for developers who want to use NVIDIA’s CUDA tools without installing them directly on their system. Here, you’ll learn how to create this Dockerfile, customize it, and test it. Let’s get started!

What is nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile?

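Strictly speaking, nvidia/cuda:12.6.0-runtime-ubuntu24.04 is the tag of a prebuilt image that NVIDIA publishes on Docker Hub: the CUDA 12.6.0 runtime libraries on top of Ubuntu 24.04. A Dockerfile built on that image, which is what this guide calls the nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile, helps you run programs that need CUDA. If you’re into machine learning or video editing, you may already know that these tasks benefit from strong GPU support. This Dockerfile makes it easier to use NVIDIA’s CUDA tools without installing them directly on your computer.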

In simple words, this Dockerfile is like a recipe for your Docker container. It tells Docker which CUDA tools to add and how to set up the environment. Instead of going through long installations, you can quickly get started with GPU acceleration using this Dockerfile.

Using this Dockerfile saves time, especially if you’re testing and coding different projects. You don’t need to mess with your system settings or worry about complex CUDA installations. With Docker, it’s just about running a container with the right setup, and this Dockerfile makes that happen.

Setting Up nvidia/cuda:12.6.0-runtime-ubuntu24.04 on Ubuntu

Before you start using the nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile, you need Docker installed on Ubuntu. Docker allows you to run containers, which are like tiny computers inside your computer. These containers help you work with CUDA without installing the CUDA libraries on your main system, although the host still needs an NVIDIA GPU driver.

Once Docker is installed, the setup gets easier. You can pull the Docker image for CUDA from NVIDIA’s Docker Hub page. This image has all the basics you need, and you don’t have to build everything from scratch. This way, you get to save time and reduce the hassle of installation.
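
As a rough illustration of the pull step, the sketch below assembles the docker pull command for the image tag named in this guide and prints it instead of running it. The helper name pull_command is made up for this example; only the docker pull subcommand and the tag come from Docker and this article.

```python
import shlex

# Image tag taken from this guide; it names a prebuilt image on Docker Hub.
IMAGE = "nvidia/cuda:12.6.0-runtime-ubuntu24.04"


def pull_command(image: str = IMAGE) -> list[str]:
    """Return the argument list for `docker pull <image>`."""
    return ["docker", "pull", image]


# Build the shell line and print it; nothing is downloaded by this script.
PULL_LINE = shlex.join(pull_command())
print(PULL_LINE)
```

Copy the printed line into a terminal when you are ready to download the image.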

When your Docker setup is ready, the next step is creating a Dockerfile. This Dockerfile will have instructions on what CUDA tools to install and how to configure them. After setting up the Dockerfile, you’re ready to launch a container for your project.

Why Use a Dockerfile for CUDA Applications?

Using a Dockerfile for CUDA applications, such as one based on nvidia/cuda:12.6.0-runtime-ubuntu24.04, has some big advantages. First, it keeps everything in a container, so you won’t mess up your system. CUDA and its libraries can sometimes be tricky to install. Docker makes it much easier because everything is stored inside a container.

Another reason to use a Dockerfile is portability. Once you create a Dockerfile, you can share it with anyone. This means your teammates can run the same CUDA setup on their systems without any issues. It’s great for teams working on the same project because everyone has the same setup.

Lastly, using Docker helps with version control. You can save different versions of your Dockerfile, so if something goes wrong, you can go back to an older setup. This makes your work more flexible and reliable.

How to Install Docker for nvidia/cuda:12.6.0-runtime-ubuntu24.04 Setup

To use the nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile, you’ll need Docker installed first. Docker is free to install on Ubuntu, and it’s simple to set up. Start by updating your system with the latest package information. Then, use a few easy commands to install Docker from Ubuntu’s software repositories.

Once Docker is installed, verify that it’s working correctly. Just type docker --version in your terminal, and it will show you the Docker version. This step matters because everything that follows depends on a working Docker installation.

You’ll also need to install the NVIDIA Container Toolkit, which provides NVIDIA’s container runtime for Docker. This runtime allows the Docker container to access your computer’s GPU, making it perfect for CUDA tasks. After setting this up, you’re ready to start using the Dockerfile for CUDA.
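
The install steps above could look roughly like the sketch below, which lists the host commands in order and prints them for review. It assumes you install Docker from Ubuntu’s own repositories (the docker.io package) and that NVIDIA’s package repository for the Container Toolkit has already been added as described in NVIDIA’s install guide; that step is left out here. The names SETUP_STEPS and docker_version are invented for this example.

```python
import shlex
import shutil
import subprocess

# Host setup steps on Ubuntu, in order. Assumption: NVIDIA's apt repository
# for the Container Toolkit was added beforehand (not shown here).
SETUP_STEPS = [
    ["sudo", "apt-get", "update"],
    ["sudo", "apt-get", "install", "-y", "docker.io"],
    ["sudo", "apt-get", "install", "-y", "nvidia-container-toolkit"],
    ["sudo", "nvidia-ctk", "runtime", "configure", "--runtime=docker"],
    ["sudo", "systemctl", "restart", "docker"],
]


def docker_version():
    """Return the output of `docker --version`, or None if Docker is missing."""
    if shutil.which("docker") is None:
        return None
    result = subprocess.run(
        ["docker", "--version"], capture_output=True, text=True, check=False
    )
    return result.stdout.strip() or None


# Print the steps for review; this script installs nothing itself.
for step in SETUP_STEPS:
    print(shlex.join(step))
print("docker --version ->", docker_version())
```

The last line prints None until Docker is installed, which makes it a quick way to confirm the first two steps worked.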

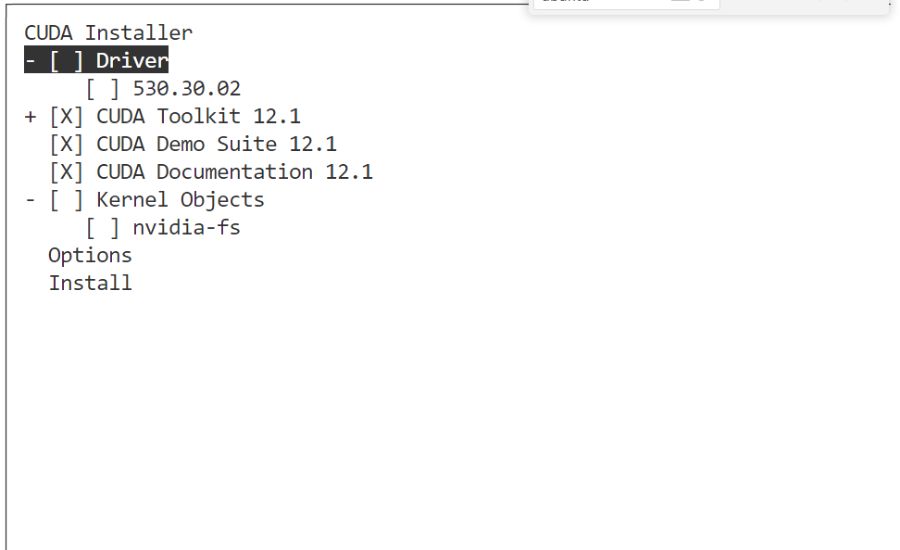

Step-by-Step Guide to Create a CUDA Dockerfile

Creating a nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile is easy and only needs a few steps. First, open a new file named “Dockerfile” in any text editor. The Dockerfile will include simple commands to set up CUDA and other needed libraries for GPU acceleration.

Next, start with a base image from NVIDIA’s Docker Hub page. This base image includes CUDA and Ubuntu, so you don’t have to start from scratch. Once the base image is defined, you can add more commands for any additional packages or tools your project needs.

After writing all the commands, save the file as “Dockerfile” and place it in your project folder. With the Dockerfile ready, you can build a Docker image by running a single command in your terminal. This image is now ready to use for CUDA tasks.
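
To make the steps above concrete, here is a minimal sketch that renders the text of such a Dockerfile and can write it into a project folder. The /app layout and the bash default command are assumptions for illustration, not something the base image requires; render_dockerfile and write_dockerfile are hypothetical helper names.

```python
from pathlib import Path

# Base image tag from this guide.
BASE_IMAGE = "nvidia/cuda:12.6.0-runtime-ubuntu24.04"


def render_dockerfile(base_image: str = BASE_IMAGE) -> str:
    """Return the text of a minimal Dockerfile built on the CUDA runtime image."""
    lines = [
        "# Start from NVIDIA's CUDA runtime image on Ubuntu 24.04",
        f"FROM {base_image}",
        "",
        "# Hypothetical project layout: copy the current folder into /app",
        "WORKDIR /app",
        "COPY . /app",
        "",
        "# Default to a shell; replace with your program's start command",
        'CMD ["bash"]',
    ]
    return "\n".join(lines) + "\n"


def write_dockerfile(folder: str) -> Path:
    """Write the rendered text to <folder>/Dockerfile and return its path."""
    path = Path(folder) / "Dockerfile"
    path.write_text(render_dockerfile(), encoding="utf-8")
    return path


# Show the Dockerfile text; call write_dockerfile("your-project") to save it.
print(render_dockerfile())
```

You can just as well type the same lines into a text editor; the script only shows what the finished file might contain.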

Running Your First Docker Container with nvidia/cuda:12.6.0-runtime-ubuntu24.04

Now that you have the nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile and Docker installed, it’s time to run your first container. A container is a virtual space where you can test and develop CUDA applications without touching your main system.

To start, use the docker build command to create an image from your Dockerfile. Once the image is built, you can run it by using the docker run command. To give the container access to the GPU, pass the --gpus option to docker run. This command starts the container, giving you a safe place to test CUDA applications.
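
The build and run steps can be sketched as below. The image tag my-cuda-app is a made-up name, and the script only prints the commands rather than running them. The --gpus all option asks Docker to expose all host GPUs, which assumes the NVIDIA Container Toolkit is installed on the host.

```python
import shlex

# Hypothetical tag for the image built from your Dockerfile.
IMAGE_TAG = "my-cuda-app"


def build_command(tag: str = IMAGE_TAG, context: str = ".") -> list[str]:
    """Build an image named <tag> from the Dockerfile in <context>."""
    return ["docker", "build", "-t", tag, context]


def run_command(tag: str = IMAGE_TAG, gpus: str = "all") -> list[str]:
    """Start an interactive shell; --rm removes the container on exit."""
    return ["docker", "run", "--rm", "-it", "--gpus", gpus, tag, "bash"]


# Print both commands so they can be copied into a terminal.
BUILD_LINE = shlex.join(build_command())
RUN_LINE = shlex.join(run_command())
print(BUILD_LINE)
print(RUN_LINE)
```

Run the build command from the folder that contains your Dockerfile, since the trailing dot tells Docker to use the current folder as the build context.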

Inside the container, you’ll have everything needed for CUDA tasks. You can run code, experiment, or even install more tools if needed. The container is like a mini-computer, and when you’re done, you can simply close it without affecting your main system.

Customizing the nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile

One of the best things about the nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile is how easy it is to customize. You can add extra tools, libraries, or other software to make it fit your project perfectly. Customizing allows you to create a unique setup for your specific needs.

To start, open the Dockerfile and add new commands for any packages or libraries you need. For example, if your CUDA project requires Python, you can add a line to install it. Each instruction in the Dockerfile adds a new layer to your image.

When you’re happy with your changes, save the Dockerfile and rebuild the Docker image. Your new image will have all the custom features you added, making it a perfect fit for your CUDA work. This flexibility helps you avoid redoing setups and keeps everything in one container.
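
As one possible way to add Python, the sketch below generates a RUN instruction that installs Ubuntu packages with apt-get and places it after the FROM line. The helper names are invented for this example, and the package list is only an illustration; swap in whatever your project needs.

```python
def apt_install_instruction(packages: list[str]) -> str:
    """Return one RUN instruction that installs the given Ubuntu packages."""
    if not packages:
        raise ValueError("at least one package is required")
    pkgs = " ".join(sorted(packages))
    # A single RUN keeps the install in one layer and clears the apt cache.
    return (
        "RUN apt-get update \\\n"
        f"    && apt-get install -y --no-install-recommends {pkgs} \\\n"
        "    && rm -rf /var/lib/apt/lists/*"
    )


def customized_dockerfile(packages: list[str]) -> str:
    """Return Dockerfile text: the CUDA base image plus the extra packages."""
    return "\n".join([
        "FROM nvidia/cuda:12.6.0-runtime-ubuntu24.04",
        apt_install_instruction(packages),
        "",
    ])


# Example: add Python 3 on top of the CUDA runtime image.
print(customized_dockerfile(["python3"]))
```

Putting the update, install, and cleanup in a single RUN instruction is a common habit for keeping images small, not a requirement.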

Testing CUDA with nvidia/cuda:12.6.0-runtime-ubuntu24.04

After setting up the nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile, it’s important to test if CUDA is working correctly. Testing ensures that your container has proper access to your GPU and can handle CUDA tasks. A quick way to test is by running a sample CUDA application.

Once you start the container, you can run a basic CUDA program to check that the GPU is usable. If the code runs smoothly and the GPU is detected, your setup is complete. If there are issues, check that the NVIDIA Container Toolkit is set up, that the container was started with GPU access, and that the host’s GPU driver is working.
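
A common quick check, sketched below, is to run nvidia-smi inside a throwaway container. This assumes the NVIDIA Container Toolkit is installed, since it is the toolkit that makes nvidia-smi and the driver available inside the container. The function names are hypothetical, and gpu_visible is defined but not called here because it would start a real container.

```python
import shlex
import shutil
import subprocess

# Image tag from this guide.
IMAGE = "nvidia/cuda:12.6.0-runtime-ubuntu24.04"


def gpu_check_command(image: str = IMAGE) -> list[str]:
    """Command that runs nvidia-smi in a temporary container with GPU access."""
    return ["docker", "run", "--rm", "--gpus", "all", image, "nvidia-smi"]


def gpu_visible(image: str = IMAGE) -> bool:
    """Run the check; True only if Docker exists and nvidia-smi exits with 0."""
    if shutil.which("docker") is None:
        return False
    result = subprocess.run(
        gpu_check_command(image), capture_output=True, text=True, check=False
    )
    return result.returncode == 0


# Print the command; call gpu_visible() yourself on a machine with a GPU.
print(shlex.join(gpu_check_command()))
```

If nvidia-smi lists your GPU, the container can see it. Keep in mind that the runtime variant of the image is meant for running CUDA programs that are already built; compiling CUDA code generally calls for the devel variant of the image.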

Testing saves time and helps you catch problems early. By confirming CUDA works in the container, you can be confident your Docker environment is ready for real projects. This step is key to a smooth CUDA experience.

Common Issues and Fixes for CUDA Dockerfiles

Working with the nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile can sometimes lead to small issues. One common problem is that the container may not recognize the GPU. This can happen if the NVIDIA Container Toolkit is not properly set up or the container was started without GPU access. Reinstalling the toolkit and restarting Docker often fixes this problem.

Another issue is related to missing libraries. If your CUDA application needs certain libraries, you may need to add them manually to the Dockerfile. Adding these libraries makes sure everything runs without errors during processing.

Sometimes, containers may be slow or have performance issues. This can happen when the container only sees some of the host’s GPUs or shares them with other workloads. Checking which GPUs the --gpus option of docker run exposes to the container is a good first step. Fixing these common issues keeps your Dockerfile ready for smooth CUDA work.
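
When the GPU is not recognized, a first step can be checking that the host has the programs this setup relies on. The sketch below is one way to do that. The tool list and hints are this example’s own choices: nvidia-smi comes with the NVIDIA driver and nvidia-ctk with the NVIDIA Container Toolkit, and missing_tools is a hypothetical helper.

```python
import shutil
from typing import Callable, Optional

# Host-side programs this setup relies on, with what a missing one suggests.
REQUIRED_TOOLS = {
    "docker": "Docker itself is not installed or not on PATH",
    "nvidia-smi": "the NVIDIA driver is missing on the host",
    "nvidia-ctk": "the NVIDIA Container Toolkit is not installed",
}


def missing_tools(
    which: Callable[[str], Optional[str]] = shutil.which,
) -> dict[str, str]:
    """Return {tool: hint} for every required tool that `which` cannot find."""
    return {
        tool: hint for tool, hint in REQUIRED_TOOLS.items() if which(tool) is None
    }


# Report anything missing on this machine.
problems = missing_tools()
if problems:
    for tool, hint in problems.items():
        print(f"{tool}: {hint}")
else:
    print("All host tools found; next, check the container with nvidia-smi.")
```

Finding all three programs does not prove the GPU works in a container, but a missing one usually points straight at the step that needs redoing.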

Conclusion

Setting up and using the nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile can seem tricky at first, but it makes working with CUDA a lot easier. With Docker, you don’t have to install everything directly on your computer. Instead, you get a container that’s ready to handle CUDA tasks without any mess. This guide has shown you how to set up, customize, and test your Dockerfile, so now you can start using it for your projects.

Using Docker with CUDA also saves you time and gives you flexibility. You can share your Dockerfile with others or keep different versions for different projects. This way, everything is organized, and you don’t have to worry about complicated setups. We hope this guide helps you feel confident about using Docker and CUDA together. Happy coding!

FAQs

Q: What is the nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile?

A: It’s a setup file that lets you run CUDA applications on an Ubuntu 24.04 base using Docker. This makes it easy to work with GPU-accelerated programs without installing CUDA directly on your computer.

Q: Why should I use a Dockerfile for CUDA?

A: Using a Dockerfile for CUDA helps keep everything in a container, making it easier to set up and share. It saves time and avoids complex installations on your main system.

Q: Do I need Docker installed to use nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile?

A: Yes, you need Docker to build and run this Dockerfile. Docker creates the container environment where your CUDA tools and applications can run smoothly.

Q: How can I test if CUDA is working in my Docker container?

A: You can test CUDA by running a sample CUDA program in the container. If the program runs without issues and detects your GPU, your setup is correct.

Q: Can I customize the nvidia/cuda:12.6.0-runtime-ubuntu24.04 Dockerfile?

A: Yes, you can customize it by adding commands in the Dockerfile. This allows you to include extra tools or libraries that your project might need.